
Not a Free Lunch But a Box of Chocolates

A critique of William Dembski's book No Free Lunch

By Richard Wein

Version 1.0

Permission is given to copy and print this page for non-profit personal or educational use.

Contents

Summary
9. Conclusion
Acknowledgements
Appendix. Dembski's Statistical Method Examined
Notes

Summary

Life is like a box of chocolates. You never know what you're gonna get.

The aim of Dr William Dembski's book No Free Lunch is to demonstrate that design (the action of a conscious agent) was involved in the process of biological evolution. The following critique shows that his arguments are deeply flawed and have little to contribute to science or mathematics. To fully address Dembski's arguments has required a lengthy and sometimes technical article, so this summary is provided for the benefit of readers without the time to consider the arguments in full.

Dembski has proposed a method of inference which, he claims, is a rigorous formulation of how we ordinarily recognize design. If we can show that an observed event or object has low probability of occurring under all the non-design hypotheses (explanations) we can think of, Dembski tells us to infer design. This method is purely eliminative--we are to infer design when we have rejected all the other hypotheses we can think of--and is commonly known as an argument from ignorance, or god-of-the-gaps argument.

Because god-of-the-gaps arguments are almost universally recognized by scientists and philosophers of science to be invalid as scientific inferences, Dembski goes to great lengths to disguise the nature of his method. For example, he inserts a middleman called specified complexity: after rejecting all the non-design hypotheses we can think of, he tells us to infer that the object in question exhibits specified complexity, and then claims that specified complexity is a reliable indicator of design.

The only biological object to which Dembski applies his method is the flagellum of the bacterium E. coli. First, he attempts to show that the flagellum could not have arisen by Darwinian evolution, appealing to a modified version of Michael Behe's argument from irreducible complexity. However, Dembski's argument suffers from the same fundamental flaw as Behe's: he fails to allow for changes in the function of a biological system as it evolves.
Since Dembski's method is supposed to be based on probability and he has promised readers of his earlier work a probability calculation, he proceeds to calculate a probability for the origin of the flagellum. But this calculation is based on the assumption that the flagellum arose suddenly, as an utterly random combination of proteins. The calculation is elaborate but totally irrelevant, since no evolutionary biologist proposes that complex biological systems appeared in this way. In fact, this is the same straw man assumption frequently made by Creationists in the past, and which has been likened to a Boeing 747 being assembled by a tornado blowing through a junkyard. This is all there is to Dembski's main argument. He then makes a secondary argument in which he attempts to show that even if complex biological systems did evolve by undirected evolution, they could have only done so if a designer had fine-tuned the fitness function or inserted complex specified information at the start of the process. The argument from fine-tuning of fitness functions appeals to a set of mathematical theorems called the "No Free Lunch" theorems. Although these theorems are perfectly sound, they do not have the implications which Dembski attributes to them. In fact they do not apply to biological evolution at all. All that is left of Dembski's argument is then the claim that life could only have evolved if the initial conditions of the Universe and the Earth were finely tuned for that purpose. This is an old argument, usually known as the argument from cosmological (and terrestrial) fine-tuning. Dembski has added nothing new to it. Complex specified information (CSI) is a concept of Dembski's own invention which is quite different from any form of information used by information theorists. Indeed, Dembski himself has berated his critics in the past for confusing CSI with other forms of information. 
This critique shows that CSI is equivocally defined and fails to characterize complex structures in the way that Dembski claims it does. On the basis of this flawed concept, he boldly proposes a new Law of Conservation of Information, which is shown here to be utterly baseless.

Dembski claims to have made major contributions to the fields of statistics, information theory and thermodynamics. Yet his work has not been accepted by any experts in those fields, and has not been published in any relevant scholarly journals.

No Free Lunch consists of a collection of tired old antievolutionist arguments: god-of-the-gaps, irreducible complexity, tornado in a junkyard, and cosmological fine-tuning. Dembski attempts to give these old arguments a new lease of life by concealing them behind veils of confusing terminology and unnecessary mathematical notation. The standard of scholarship is abysmally low, and the book is best regarded as pseudoscientific rhetoric aimed at an unwary public which may mistake Dembski's mathematical mumbo jumbo for academic erudition.

1. Introduction

In the theater of confusion, knowing the location of the exits is what counts.

William Dembski's book No Free Lunch: Why Specified Complexity Cannot be Purchased without Intelligence[1] is the latest of his many books and articles on inferring design in biology, and will probably play a central role in the promotion of Intelligent Design pseudoscience[2] over the next few years. It is the most comprehensive exposition of his arguments to date. The purpose of the current critique is to provide a thorough critical examination of these arguments. Dembski himself has often complained that his critics have not fully engaged his arguments. I believe that complaint is unjustified, though I would agree that some earlier criticisms have been poorly aimed. This critique should lay to rest any such complaints.

As in his previous work, Dembski defines his own terms poorly, gives new meanings to existing terms (usually without warning), and employs many of these terms equivocally. His assertions often appear to contradict one another. He introduces a great deal of unnecessary mathematical notation. Thus, much of this article will be taken up with the rather tedious chore of establishing just what Dembski's arguments and claims really mean. I have tried very hard to find charitable interpretations, but there are often none to be found. I have also requested clarifications from Dembski himself, but none have been forthcoming.

Some time ago, I posted a critique[3] of Dembski's earlier book, The Design Inference,[4] to the online Metaviews forum, to which he contributes, pointing out the fundamental ambiguities in his arguments. His only response was to call me an "Internet stalker" while refusing to address the issues I raised, on the grounds that "the Internet is an unreliable forum for settling technical issues in statistics and the philosophy of science".[5] He clearly read my critique, however, since he now acknowledges me as having contributed to his work (p. xxiv).
While some of the ambiguities I drew attention to in that earlier critique have been resolved in his present volume, others have remained and many new ones have been added.

Some readers may dislike the frankly contemptuous tone that I have adopted towards Dembski's work. Critics of Intelligent Design pseudoscience are faced with a dilemma. If they discuss it in polite, academic terms, the Intelligent Design propagandists use this as evidence that their arguments are receiving serious attention from scholars, suggesting this implies there must be some merit in their arguments. If critics simply ignore Intelligent Design arguments, the propagandists imply this is because critics cannot answer them. My solution to this dilemma is to thoroughly refute the arguments, while making it clear that I do so without according those arguments any respect at all.

This critique assumes a basic knowledge of mathematics, probability theory and evolutionary theory on the part of the reader. In order to simplify some of my arguments, I have relegated many details to endnotes, which can be reached by numbered links. In some cases, assertions which are not substantiated in the body of the text are supported by arguments in endnotes. Citations consisting merely of page numbers refer to pages in No Free Lunch.

Regrettably, some older browsers are unable to display a number of mathematical symbols which are used in this article. Netscape 4 is one of these.

2. Design and Nature

In spring, when woods are getting green,

For a book which is all about inferring design, it is surprising to discover that No Free Lunch does not clearly define the term. Design is equated with intelligent agency, but that term is not defined either. It is also described negatively, as the complement of necessity (deterministic processes) and chance (stochastic processes).
However, deterministic and stochastic processes are themselves normally defined as mutually exhaustive complements: those processes which do not involve any uncertainty and those which do. So it is not clear what, if anything, remains after the exclusion of those two categories. Dembski associates design with the actions of animals, human beings and deities, but seems to deny the label to the actions of computers, no matter how innovative their output may be. What distinguishes an animal mind, say, from a computer? Obviously there are many physical differences. But why should the actions of one be considered design and not the other? The only explanation I can think of is that one is conscious and the other, presumably, is not. I conclude that, when he infers design, Dembski means that a conscious mind was involved. It appears that Dembski considers consciousness to be a very special kind of process, which cannot be attributed to physical laws. He tells us that intelligent design is not a mechanistic explanation (pp. 330-331). Dembski would certainly not be alone in this view, though it is not at all clear what it means for a process to be non-mechanistic. It appears, however, that such a process is outside the realm of cause and effect. This raises all sorts of difficult philosophical questions, which I will not attempt to consider here. Even if we accept that non-mechanistic processes exist, Dembski gives us no reason to think that consciousness (or intelligent design) is the only possible type of non-mechanistic process. Yet he seems to assume this to be the case. Even with this interpretation, we still run into a problem. In his Caputo example (p. 55), Dembski uses his design inference to distinguish between two possible explanations both involving the actions of a conscious being: either Caputo drew the ballots fairly or he cheated. Dembski considers only the second of these alternatives to be design. But both explanations involve a conscious agent. 
It could be said that, if Caputo drew fairly, he was merely mimicking the action of a mechanistic device, so this doesn't count. But that would raise the question of just what a mechanistic device is capable of doing. Is a sophisticated computer not capable of cheating? Indeed, is there any action of a human mind which cannot, in principle, be mimicked by a sufficiently sophisticated computer? If not, how can we tell the difference between conscious design and a computer mimicking design? Even if you doubt that in principle a computer could mimic all the actions of a human mind, consider whether it could mimic the actions of a rat, which Dembski also considers to be an intelligent agent capable of design (pp. 29-30). To escape this dilemma, Dembski invokes the concept of derived intentionality: the output of a computer can "exhibit design", but the design was performed by the creator of the computer and not by the computer itself (pp. 223, 326). Whenever a phenomenon exhibits design, there must be a designer (a conscious mind, in my interpretation) somewhere in the causal chain of events leading to that phenomenon. Dembski claims that contemporary science rejects design as a legitimate mode of explanation (p. 3). But he himself gives examples of scientists making inferences involving human agency, such as the inference by archaeologists that certain stones are arrowheads made by early humans (p. 71), and he labels these "design inferences". Is he claiming that such archaeologists are mavericks operating outside the bounds of mainstream science? I don't think so. I think that what Dembski really means to claim here is that contemporary science does not allow explanations involving non-mechanistic processes, and he is projecting his own belief that design is a non-mechanistic process onto contemporary science. 
But even if it's true that science does not allow explanations involving non-mechanistic processes, it certainly does allow the action of a mind to be inferred where no judgement need be made as to whether mental processes are mechanistic or not (and such a judgement is generally unnecessary). An alternative interpretation of Dembski's claim might be that contemporary science rejects design as a legitimate mode of explanation in accounting for the origin of biological organisms. If this is what he means, then I reject the claim. If we were to discover the remains of an ancient alien civilization with detailed records of how the aliens manipulated the evolution of organisms, then I think that mainstream science would have little difficulty accepting this as evidence of design in biological organisms. The word natural has been the source of much confusion in the debate over Intelligent Design. It has two distinct meanings: one is the complement of artificial, i.e. involving intelligent agency; the other is the complement of supernatural. Dembski tells us that he will use the word in the former sense: "...I am placing natural causes in contradistinction to intelligent causes" (p. xiii). He then goes on to say that contemporary science is wedded to a principle of methodological naturalism:

But the methodological naturalism on which most scientists insist requires only the rejection of supernatural explanations, not explanations involving intelligent agency. Indeed, we have just seen that contemporary science allows explanations involving human designers and, I argue, intelligent alien beings. Perhaps what Dembski really means is that methodological naturalism rejects the invocation of an "unembodied designer" (to use his term).[6]

Dembski introduces the term chance hypothesis to describe proposed explanations which rely entirely on natural causes. This includes processes comprising elements of both chance and necessity (p. 15), as well as purely deterministic processes. It may seem odd to refer to purely deterministic hypotheses as chance hypotheses, but Dembski tells us that "necessity can be viewed as a special case of chance in which the probability distribution governing necessity collapses all probabilities either to zero or one" (p. 71). Since Dembski defines design as the complement of chance and necessity, it follows that a chance hypothesis could equally well (and with greater clarity) be called a non-design hypothesis. And since he defines natural causes as the complement of design, we can also refer to chance hypotheses as natural hypotheses.

Dembski's use of the term chance hypothesis has caused considerable confusion in the past, as many people have taken chance to mean purely random, i.e. all outcomes being equally probable. While Dembski's usage has been clarified in No Free Lunch, I believe it still has the potential to confuse. For the sake of consistency with Dembski's work, I will generally use the term chance hypothesis, but I will switch to the synonym natural hypothesis or non-design hypothesis when I think this will increase clarity.

3. The Chance-Elimination Method

Ignorance, Madam, pure ignorance.
In Chapter 2 of No Free Lunch, Dembski describes a method of inferring design based on what he calls the Generic Chance Elimination Argument. I'll refer to this method as the chance-elimination method. This method assumes that we have observed an event, and wish to determine whether any design was involved in that event. The chance-elimination method is eliminative--it relies on rejecting chance hypotheses. Dembski gives two methods for eliminating chance hypotheses: a statistical method for eliminating individual chance hypotheses, and proscriptive generalizations, for eliminating whole categories of chance hypotheses.

3.1 Dembski's Statistical Method

The fundamental intuition behind Dembski's statistical method is this: we have observed a particular event (outcome) E and wish to check whether a given chance hypothesis H provides a reasonable explanation for this outcome.[7] We select an appropriate rejection region (a set of potential outcomes) R, where E is in R, and calculate the probability of observing an outcome in this rejection region given that H is true, i.e. P(R|H).

It is important to note that we need to combine the probabilities of all outcomes in an appropriate rejection region, and not just take the probability of the particular outcome observed, because outcomes can individually have small probabilities without their occurrence being significant. A rejection region which is appropriate for use in this way is said to be detachable from the observed outcome, and a description of a detachable rejection region is called a specification (though Dembski often uses the terms rejection region and specification interchangeably).

Consider Dembski's favourite example, the Caputo case (pp. 55-58). A Democrat politician, Nicholas Caputo, was responsible for making random draws to determine the order in which the two parties (Democrat and Republican) would be listed on ballot papers. Occupying the top place on the ballot paper was known to give the party an advantage in the election, and it was observed that in 40 out of 41 draws Caputo drew a Democrat to occupy this favoured position. In 1985 it was alleged that Caputo had deliberately manipulated the draws in order to give his own party an unfair advantage. The court which considered the allegation against Caputo noted that the probability of picking his own party 40 out of 41 times was less than 1 in 50 billion, and concluded that "confronted with these odds, few persons of reason will accept the explanation of blind chance."[8]

In conducting his own analysis of this event, Dembski arrives at the same probability as did the court, and explains the reasoning behind his conclusion. The chance hypothesis H which he considers is that Caputo made the draws fairly, with each party (D and R) having a 1/2 probability of being selected for the top place on each occasion.
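The arithmetic of the Caputo case is easy to check. The following is a minimal Python sketch, assuming only the fair-draw hypothesis H as described here (41 independent draws, each party having probability 1/2). The function names and the position of the single R in the example sequence are illustrative assumptions; they do not come from Dembski or from the court.

```python
from math import comb

N_DRAWS = 41   # number of ballot draws in the Caputo case
P_D = 0.5      # probability of drawing a Democrat under the fair-draw hypothesis H


def prob_exact_sequence(sequence, p_d=P_D):
    """Probability of one particular sequence of independent D/R draws."""
    d = sequence.count("D")
    r = sequence.count("R")
    return p_d ** d * (1 - p_d) ** r


def prob_at_least(k, n=N_DRAWS, p_d=P_D):
    """Probability of k or more Ds in n independent draws (binomial upper tail)."""
    return sum(
        comb(n, j) * p_d ** j * (1 - p_d) ** (n - j)
        for j in range(k, n + 1)
    )


# A sequence with a single R among 41 draws; where the R falls is arbitrary here.
observed = "D" * 22 + "R" + "D" * 18

p_sequence = prob_exact_sequence(observed)   # one exact sequence
p_region = prob_at_least(40)                 # any outcome with 40 or more Ds

print(f"one exact sequence: {p_sequence:.3g}")
print(f"40 or more Ds:      {p_region:.3g}")
print(f"odds:               about 1 in {1 / p_region:,.0f}")
```

Under these assumptions every individual sequence of 41 draws has the same probability, (1/2)^41, while the probability of 40 or more Ds is 42 times larger, since 41 sequences contain exactly one R and one contains none. That tail probability is somewhat less than 1 in 50 billion, consistent with the figure cited by the court.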
Suppose that we had observed a typical sequence of 41 draws, such as the following:

DRRDRDRRDDDRDRDDRDRRDRRDRRRDRRRDRDDDRDRDD

The probability of this precise sequence occurring, given H, is extremely small: (1/2)^41 = 4.55 × 10^-13. However, unless that particular sequence had been predicted in advance, we would not consider the outcome at all exceptional, despite its low probability, since it was very likely that some such random-looking sequence would occur. The historical sequence, on the other hand, contained just one R, and so looked something like this:

DDDDDDDDDDDDDDDDDDDDDDRDDDDDDDDDDDDDDDDDD

The second sequence (call it E) has exactly the same probability as the first one, i.e. P(E|H) = 4.55 × 10^-13, but this time we would consider it exceptional, because the probability of observing so many Ds is extremely small. Any outcome showing as many Ds as this (40 or more Ds out of 41 draws) would have been considered at least as exceptional, so the probability we are interested in is the probability of observing 40 or more Ds. "40 or more Ds", then, is our specification, and, as it happens, there are 42 different sequences matching this specification, so P(R|H) = 42 × P(E|H) = 1.91 × 10^-11, or about 1 in 50 billion. In other words, the probability we are interested in here is not the probability of the exact sequence we observed, but the probability of observing some outcome matching the specification. If we decide that this probability is small enough, we reject H, i.e. we infer that Caputo's draws were not fair.

From now on, I will use the expression "small probability" to mean "probability below an appropriate probability bound". In order to apply Dembski's method, we need to know how to select an appropriate specification and probability bound. Dembski expounds at length a set of rules for selecting these parameters, but they can be boiled down to the following:

Although I believe Dembski's statistical method is seriously flawed, the issue is not important to my refutation of Dembski's design inference. For the remainder of the main body of this critique, therefore, I will assume for the sake of argument that the method is valid. A discussion of the flaws will be left to an appendix. It is worth noting, however, that this method has not been published in any professional journal of statistics and appears not to have been recognized by any other statistician.

3.2 Proscriptive Generalizations

Dembski argues that we can eliminate whole categories of chance hypotheses by means of proscriptive generalizations. For example, he mentions the second law of thermodynamics, which proscribes the possibility of a perpetual motion machine. He describes the logic of such generalizations in terms of mathematical invariants (p. 274), though this adds absolutely nothing to his argument. I accept that proscriptive generalizations can sometimes be made, and Dembski is welcome to use them to eliminate specific categories of chance hypotheses. But there is no proscriptive generalization that can rule out all chance hypotheses. Furthermore, his claim to have found a proscriptive generalization against Darwinian evolution of irreducibly complex systems is hollow (see 4.2 below).

3.3 The Argument From Ignorance

The conclusion of the Generic Chance Elimination Argument (step #8) is stated by Dembski as follows:

{Hi} is the set of all chance hypotheses which we believe "could have been operating to produce E" (p.72). Dembski also writes:

So, when we have eliminated all the chance hypotheses we can think of, we infer that the event was highly improbable with respect to all known causal mechanisms, and we call this specified complexity. Later Dembski tells us that an inference of specified complexity should lead inevitably to an inference of design. This being the case, it's not clear that the notion of specified complexity is serving any useful purpose here. Why not cut out the middleman and go straight from the Generic Chance Elimination Argument to design? Unfortunately, the introduction of this middleman does serve to cause considerable confusion, because Dembski equivocates between this sense of specified complexity and the sense assigned by his uniform-probability method of inference (which I will explain in section 6). To help clear up the confusion, I will refer to this middleman sense as eliminative specified complexity and to the other sense as uniform-probability specified complexity. Note that Dembski's specified complexity is not a quantity: an event simply exhibits specified complexity or it doesn't.

Thus we see that the chance-elimination method is purely eliminative. It tells us to infer design when we have ruled out all the chance (i.e. non-design) hypotheses we can think of. The design hypothesis says nothing whatsoever about the identity, nature, aims, capabilities or methods of the designer. It just says, in effect, "a designer did it".[10]

This type of argument is commonly known as an argument from ignorance or god-of-the-gaps argument. So that there is no danger of misunderstanding, let me clarify that the accusation of argument from ignorance is not an assertion that those making the argument are ignorant of the facts, or even that they are failing to utilize the available facts.
The proponents of an argument from ignorance are demanding that their explanation be accepted just because the scientific community is ignorant (at least partially) of how an event occurred, rather than because their own explanation has been shown to be a good one. Note that an argument from scientific ignorance differs from the deductive fallacy of argument from ignorance. The deductive fallacy takes the following form: "My proposition has not been proven false, so it must be true." The scientific argument from ignorance is not a deductive fallacy, because scientific inferences are not deductive arguments. A god-of-the-gaps argument is an argument from ignorance in which the default hypothesis, to be accepted when no alternative hypothesis is available, is "God did it". Since Dembski tells us that his criterion only infers the action of an unknown designer, and not necessarily a divine one, the term designer-of-the-gaps might be more appropriate here, but I think it is reasonable to use the more familiar term, since the arguments follow the same eliminative pattern and Dembski has made it clear that the designer he has in mind is the Christian God. The god-of-the-gaps argument should not be confused with a god-of-the-gaps theology. The latter proposes that God's actions are restricted to those areas of which we lack knowledge, but does not offer this as an argument for the existence of God. Dembski makes no good case for awarding such a privileged status to the design hypothesis. Why should we prefer "an unknown designer did it" to "unknown natural causes did it" or "we don't know what did it"? Furthermore, as we shall see, he tells us to accept design by elimination even when we do have some outline ideas for how natural causes might have done it. 
3.4 Dembski's Responses to the Charge of Argument From Ignorance

Since arguments from ignorance are almost universally rejected as unsound by scientists and philosophers of science, Dembski is sensitive to the charge, but his attempts to avoid facing up to the obvious are mere evasions.

Dembski is misconstruing the charge of argument from ignorance. It is not a question of how much knowledge we have utilized. Scientific knowledge is always incomplete. The chance-elimination method is purely eliminative because it makes no attempt to consider the merits of the design hypothesis, but merely relies on eliminating the available alternatives.

Dembski's phrase "exhaustive with respect to the inquiry in question" is the sort of circumlocution in which he excels. It just means that the set is as exhaustive as we can make it. In other words, it's a fancy way to say we have eliminated all the chance hypotheses we could think of.

This is a clear argument from ignorance. Unless design skeptics can propose an explicit natural explanation, Dembski tells us, we should infer design.

Dembski is not being criticized for failing to eliminate all possible chance hypotheses, but for adopting a purely eliminative method in the first place.

Yes, these design inferences are fallible, as are all scientific inferences. That is not the issue. The difference is that these inferences are not purely eliminative. The experts in question have in mind a particular type of intelligent designer (human beings) whose abilities and motivations they know a good deal about. They can therefore compare the merits of such an explanation with the merits of other explanations.

If Dembski wishes to defend god-of-the-gaps arguments as a legitimate mode of scientific inference, he is welcome to try. What is less welcome are his attempts to disguise his method as something more palatable.

3.5 Comparative and Eliminative Inferences

One way in which Dembski attempts to defend his method is to suggest that there is no viable alternative. The obvious alternative, however, is to consider all available hypotheses, including design hypotheses, on their merits, and then select the best of them. This is the position adopted by almost all philosophers of science, although they disagree on how to evaluate the merits of hypotheses. There seems no reason to treat inferences involving intelligent agents differently in this respect from other scientific inferences.

Dembski argues at some length against the legitimacy of comparative approaches to inference (pp. 101-110, 121n59). I will not address the specifics of the likelihood approach, on which he concentrates his fire. I leave that to its proponents. However, his rejection of comparative inferences altogether is clearly untenable. When we have two or more plausible hypotheses available--whether those involve intelligent agents or not--we must use some comparative method to decide between them.

Consider, for example, the case of the archaeologists who make inferences about whether flints are arrowheads made by early humans or naturally occurring pieces of rock.
Let us take a borderline case, in which a panel of archaeologists is divided about whether a given flint, taken from a site inhabited by early humans, is an arrowhead. Now suppose that the same panel had been shown the same flint but told that it came from a location which has never been inhabited by flint-using humans, say Antarctica. The archaeologists would now be much more inclined to doubt that the flint was man-made, and more inclined to attribute it to natural causes. A smaller proportion (perhaps none at all) would now infer design. The inference of design, then, was clearly influenced by factors affecting the plausibility of the design hypothesis: whether or not flint-using humans were known to have lived in the area. The inference was not based solely on the elimination of natural hypotheses. It is not my intention to argue for any particular method of comparing hypotheses. Philosophers of science have proposed a number of comparative approaches, usually involving some combination of the following criteria:

Other criteria often cited include explanatory power, track record, scope, coherence and elegance.

In opposing comparative methods, Dembski argues that hypotheses can be eliminated in isolation without there necessarily being a superior competitor. In practical terms, I agree, although I suspect that we would not eliminate a hypothesis unless we had in the back of our minds that there existed a plausible possibility of a better explanation. I do not deny that we can eliminate a hypothesis without having a better one in mind; I deny that we can accept a hypothesis without having considered its merits, as Dembski would have us do in the case of his design hypothesis. If all the available hypotheses score too badly according to our criteria, it may be best to reject all of them and just say "we don't know".

3.6 Reliability and Counterexamples

Dembski argues, on the basis of an inductive inference, that the chance-elimination method is reliable:

Setting aside the question of whether such an induction would be justified if its premise were true, let's just consider whether or not the premise is true. Contrary to Dembski's assertion, his section 1.6 did not show anything of the sort. In fact, the only cases where we know that Dembski's method has been used to infer design are the two examples that Dembski himself describes: the Caputo case and the bacterial flagellum. And in neither of these cases has design been independently established. Dembski wants us to believe that his method of inference is basically the same method already used in our everyday and scientific inferences of design. I have already argued that this is untrue. But even if we suppose, for the sake of argument, that our typical design inferences are indeed based on the sort of purely eliminative approach proposed by Dembski, then it is not difficult to find counterexamples, in which design was wrongly inferred because of ignorance of the true natural cause:

Perhaps Dembski would object that his claim ("in all cases where we know the causal history and where specified complexity was involved, an intelligence was involved as well") was only referring to cases where we observe specified complexity today. But, by definition, those are cases where we don't have a plausible natural explanation. If we had one, we would not infer specified complexity. If we know the causal history and it was not a natural cause, it must have been design. So, if this is what Dembski means, his claim is a tautology. It says that, whenever the cause is known to be design, the cause is design! You cannot make an inductive inference from a tautology. It would do Dembski no good to claim that these are cases of derived design (see 6.1 below), e.g. that mushrooms and the solar system were originally designed. The chance-elimination method infers design in the particular event which is alleged to have small probability of occurring under natural causes. For example, in the case of the flagellum, Dembski claims that design was involved in the origin of the flagellum itself, and not just indirectly in terms of the Earth or the Universe having been designed. The chance-elimination method is initially introduced in a simplified form called the Explanatory Filter. The criterion for the filter to recognize design is labelled the complexity-specification criterion. Unfortunately, the use of this simplified account has caused considerable confusion in the past, because it possesses two misleading features:

Although Dembski has made some attempts to clarify the situation in No Free Lunch, his continued use of the Explanatory Filter in its highly misleading form is inexplicable. And the misdirection is not limited to the Explanatory Filter itself. It occurs elsewhere too, in statements such as this:

4. Applying the Method to Nature

He uses statistics as a drunken man uses lampposts--for support rather than illumination.

It has been several years since Dembski first claimed to have detected design in biology by applying his method of inference. Yet until the publication of No Free Lunch, he had never provided or cited the details of any such application. Critics were therefore looking forward to seeing the long-promised probability calculation that would support the claim. While I, for one, did not expect a convincing calculation, even I was amazed to discover that Dembski has offered us nothing but a variant on the old Creationist "tornado in a junkyard"14 straw man, namely the probability of a biological structure occurring by purely random combination of components.

The only biological structure to which Dembski applies his method is the flagellum of the bacterium E. coli. As his method requires him to start by determining the set $\{H_i\}$ of all chance hypotheses which "could have been operating to produce E [the observed outcome]" (p. 72), one might expect an explicit identification of the chance hypothesis under consideration. Dembski provides no such explicit identification, and the reader is left to infer it from the details of the calculation.

Perhaps the reason Dembski failed to identify his chance hypothesis is that, when clearly named, it is so transparently a straw man. No biologist proposes that the flagellum appeared by purely random combination of proteins--they believe it evolved by natural selection--and all would agree that the probability of appearance by random combination is so minuscule that this is unsatisfying as a scientific explanation. Therefore for Dembski to provide a probability calculation based on this absurd scenario is a waste of time. There is no need to consider whether Dembski's calculation is correct, because it is totally irrelevant to the issue.
Nevertheless, since Dembski does not state clearly that he has based his calculation on a hypothesis of purely random combination, I will describe the calculation briefly in order to demonstrate that this is the case. Dembski tells us to multiply three partial probabilities to arrive at the probability of a "discrete combinatorial object":

$p_{dco} = p_{orig} \times p_{local} \times p_{config}$

Each of these probabilities individually is below Dembski's universal probability bound, so he does not proceed to multiply them. Incidentally, Dembski errs in choosing to calculate a formation probability for the flagellum itself. He should have considered the formation of the DNA to code for a flagellum. If a flagellum appeared without the DNA to code for it, it would not be inherited by the next generation of bacteria, and so would be lost. In order to justify his failure to calculate the probability of the flagellum arising by Darwinian evolution, Dembski invokes the notion of irreducible complexity, which, he argues, provides a proscriptive generalization against Darwinian evolution of the flagellum. Irreducible complexity was introduced into the Intelligent Design argument by biochemist Michael Behe. The subject has been addressed in great detail elsewhere, so I will not repeat all the objections.15 However, I would like to draw attention to a point which some readers of Behe have overlooked. Behe divided potential Darwinian pathways for the evolution of an irreducibly complex (hereafter IC) system into two categories: direct and indirect.16 The direct pathways are those in which a system evolves purely by the addition of several new parts that provide no advantage to the system until all are in place. All other potential pathways are referred to as indirect. Behe then argues that IC systems cannot evolve via direct pathways. But his direct pathways exclude two vital elements of the evolutionary process: (a) the evolution of individual parts of a system; and (b) the changing of a system's function over time, so that, even though a given part may have contributed nothing to the system's current function until the other parts were in place, it may well have contributed to a previous function. When it comes to indirect pathways, Behe has nothing but an argument from ignorance: no one has given a detailed account of such a pathway. 
The truth of this assertion has been contested, but it depends on just how much detail is demanded. Behe demands a great deal. He then asserts that the evolution of an IC system by indirect pathways is extremely improbable, but he has provided no argument to support this claim. It is merely his intuition.17 Dembski repeats the claim that the problem of explaining the evolution of IC molecular systems has "proven utterly intractable" (p. 246), but evolutionary explanations have now been proposed for several of the systems cited by Behe, including the blood-clotting cascade, the immune system, the complement system and the bacterial flagellum. The last of these is highly speculative, but is sufficient to refute the claim of utter intractability.18 What then has Dembski added to the debate over irreducible complexity? First, he has attempted to counter the objections of Behe's critics. I won't comment on these except to say that some of these critics appear to have misunderstood what Behe meant by irreducible complexity. This is unsurprising since his definition was vague and was accompanied by several misleading statements. Indeed, Behe himself has admitted that his definition was ambiguous.19 He has even tentatively proposed a completely new definition.20 Second, Dembski has proposed a new definition of his own, making three major changes:

The last of these changes is sure to create yet more confusion. It is no longer enough, according to Dembski, to show that a system is IC. It must also meet the two additional criteria. Yet, elsewhere in his book, Dembski continues to refer to irreducible complexity as a sufficient condition for inferring design:

I can understand the temptation to use irreducibly complex as a shorthand term for irreducibly complex with an irreducible core which has numerous and diverse parts and exhibits minimal complexity and function, but Dembski should really have introduced a new term for the latter. From now on, when claiming to have found an example of irreducible complexity in nature, Intelligent Design proponents should specify which of the following definitions they have in mind: Behe's original definition; Behe's corrected version of his original definition; Behe's proposed new definition; Dembski's definition; or Dembski's definition plus the two additional criteria. I predict most will fail to do so. For the remainder of this article, I will use the term IC in the last of these senses. It should not be assumed that all the examples of IC systems offered by Behe necessarily meet Dembski's criteria. Dembski considers only the bacterial flagellum. Whether Behe's other example systems are IC in this new sense remains to be established.

Let us accept, for the sake of argument, that Dembski's definition is tight enough to ensure that IC systems cannot evolve by direct pathways. What has he said on the vital subject that Behe failed to address--the subject of indirect pathways? The answer is nothing. The crux of his argument is this:

But there is indeed an option that Dembski has overlooked. The system could have evolved from a simpler system with a different function. In that case there could be functional intermediates after all. Dembski's mistake is to assume that the only possible functional intermediates are intermediates having the same function. Dembski's failure to consider the possibility of a change of function is seen in his definition of irreducible complexity:

There is no reason why a system's basic function should be its original one. The concepts of basic function and original function may not even be well-defined. If a system performs two vital functions, which is the basic one? The concept of an original function assumes there is an identifiable time at which the system came into existence. But the system may have a long history in which parts have come and gone, and functions have changed, making it impossible to trace back the origin of the system to one particular time. And what is a system? If two proteins start to interact in a beneficial way, do they immediately become a system? If so, we may have to trace the history of a system all the way back to the time when it was just two interacting proteins. There is a tendency among antievolutionists to think of biological systems as if they were like man-made machines, in which the system and its parts have been designed for one specific function and are difficult to modify for another function. But biological systems are much more flexible and dynamic than man-made ones. A few other points are worth noting:

Before finishing this section, it might be useful to clear up a few more red herrings which Dembski introduces into his discussion of irreducible complexity.

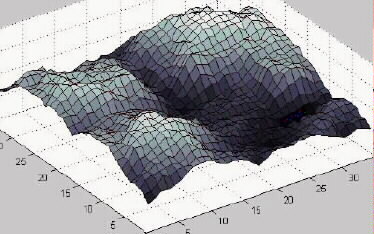

5. Evolutionary Algorithms

Attempt the end, and never stand to doubt;

In recent years there has been a considerable growth of interest in evolutionary algorithms, executed on computers, as a means for solving optimization problems. As the name suggests, evolutionary algorithms are based on the same underlying principles as biological evolution: reproduction with random variations, and selection of the "fittest". Since they appear to demonstrate how unguided processes can produce the sort of functional complexity21 that we see in biology, they are a problem which Dembski needs to address. In addition, he tries to turn the subject to his advantage, by appealing to a set of mathematical theorems, known as the No Free Lunch theorems, which place constraints on the problem-solving abilities of evolutionary algorithms.

5.1 Black-Box Optimization Algorithms

We will be concerned here with a type of algorithm known as a black-box optimization (or search) algorithm. Such algorithms include evolutionary algorithms, but are not limited to them. The problems which black-box optimization algorithms solve have just two defining attributes: a phase space, and a fitness function defined over that phase space. In the context of these algorithms, phase spaces are usually called search spaces. Also the term fitness function is usually reserved for evolutionary algorithms, the more general term being objective function or cost function (maximizing an objective function is equivalent to minimizing a cost function). But I will adopt Dembski's terminology for the sake of consistency.

The phase space is the set of all potential solutions to the problem. It is generally a multidimensional space, with one dimension for each variable parameter in the solution. Most real optimization problems have many parameters, but, for ease of understanding, it is helpful to think of a two-dimensional phase space--one with two parameters--which can be visualized as a horizontal plane.
The fitness function is a function over this phase space; in other words, for every point (potential solution) in the phase space the fitness function tells us the fitness value of that point. We can visualize the fitness function as a three-dimensional landscape where the height of a point represents its fitness (figure 1). Points on hills represent better solutions while points in valleys represent poorer ones. The terms fitness function and fitness landscape are used interchangeably.

Figure 1. A Fitness Landscape

An optimization algorithm is, broadly speaking, an algorithm for finding high points in the landscape. Being a black-box algorithm means that it has no knowledge about the problem it is trying to solve other than the underlying structure of the phase space and the values of the fitness function at the points it has already visited. The algorithm visits a sequence of points $(x_1, x_2, \ldots, x_m)$, evaluating the fitness, $f(x_i)$, of each one in turn before deciding which point to visit next. The algorithm may be stochastic, i.e. it may incorporate a random element in its decisions.

Evaluating the fitness function is typically a very computation-intensive process, possibly involving a simulation. For example, if we are trying to optimize the design of a road network, we might want the algorithm to run a simulation of daily traffic for each possible design that it considers. The performance of the algorithm is therefore measured in terms of the number of fitness function evaluations ($m$) needed to reach a given level of fitness, or the level of fitness reached after a given number of function evaluations. Each function evaluation can be thought of as a time step, so we can think in terms of the level of fitness reached in a given time. Note that we are interested in the best fitness value found throughout the whole time period, and not just the fitness of the last point visited.

There are three types of optimization algorithm of interest to us here:

Dembski adopts a very broad definition of evolutionary algorithm which includes all the optimization algorithms which we consider here, including random search (pp. 180, 229n9, 232n31). Another term used by Dembski is blind search. He uses it in two senses. First it means a random walk, an algorithm which moves from one location in the phase space to another location selected randomly from nearby points (p. 190). Later he uses it to mean any search in which the fitness function has only two possible values: the point being evaluated either is or is not in a target area (p. 197). The usual (though not exclusive) meaning of blind search in the literature of evolutionary algorithms is as a synonym for black-box algorithm.22

5.2 Fine-Tuning the Fitness Function

Dembski recognizes that evolutionary algorithms can produce quite innovative results, but he argues that they can only do so because their fitness function has been fine-tuned by the programmer. In doing so, he alleges, the programmer has "smuggled" complex specified information or specified complexity into the result. (These two terms will be discussed later.)

A similar claim is made regarding biological evolution:

These claims are based on a fundamental misconception of the role of the fitness function in an evolutionary algorithm. A fitness function incorporates two elements:

In general, then, the fitness function defines the problem to be solved, not the way to solve it, and it therefore makes little sense to talk about the programmer fine-tuning the fitness function in order to solve the problem. True, there may be some aspects of the problem which are unknown, or where the programmer decides, for practical reasons, to simplify his model of the problem. Here the programmer could make decisions in such a way as to improve the performance of the algorithm. But there is no reason to think that this makes a significant contribution to the success of evolutionary algorithms. In one of his articles, Dembski quotes evolutionary psychologist Geoffrey Miller in support of his claim that the fitness function needs to be fine-tuned:

But the engineer's goals, constraints, trade-offs, etc, are parameters of the problem to be solved. They must be carefully chosen to ensure that the evolutionary algorithm addresses the right problem, not to guide it to the solution of a given problem, as Miller tells us in the preceding paragraph:

It is other elements of the evolutionary algorithm which may have to be carefully selected if the algorithm is to perform well:

A similar point is made by Wolpert and Macready:

Perhaps Dembski's confusion on this subject can be explained by his obsession with Richard Dawkins' Weasel program,26 to which he devotes a large part of his chapter on evolutionary algorithms. In that example, invented only to illustrate one specific point, the fitness function was indeed chosen in order to help the algorithm converge on the solution. That program, however, was not created to solve an optimization problem. The program had a specific target point, unlike real optimization algorithms, where the solution is unknown. In the case of biological evolution, the situation is somewhat different, because the evolutionary parameters themselves evolve over the course of evolution. For example, according to evolutionary theory, the genetic code has evolved by natural selection. It is therefore not just good luck that the genetic code is so suited to evolution. It has evolved to be that way. When Dembski talks about fine-tuning of the fitness function for biological evolution, what he really means is fine-tuning of the cosmological and terrestrial initial conditions, including the laws of physics. When these conditions are a given, as they are for practical purposes, they contribute to determining the fitness function. But Dembski argues that these conditions must have been selected from a set of alternative possibilities in order to make the evolution of life possible. When considered in this way, alternative sets of initial conditions should properly be considered as elements in another phase space, and not as part of the fitness function. Dembski sometimes refers to this as a phase space of fitness functions. One can understand what he means by this, but it is potentially confusing, not least because the fitness functions for biological organisms are not fixed, but evolve as their environment evolves. 
We see then that Dembski's argument from fine-tuning of fitness functions is just a disguised version of the well-known argument from fine-tuning of cosmological and terrestrial initial conditions.27 Dembski lists a catalogue of cosmological and terrestrial conditions which need to be just right for the origin of life (pp. 210-211). This argument is an old one, and I won't address it here. The only new twist that Dembski gives to it is to cast the argument in terms of fitness functions and appeal to the No Free Lunch theorems for support. That appeal will be considered below, but first I want to make a couple of observations.

Dembski's two conclusions cannot both be true. On the one hand he is arguing that the initial conditions were fine-tuned to make natural evolution of life possible. On the other hand, he is arguing that natural evolution of life wasn't possible. Not that there's anything wrong with Dembski having two bites at the cherry. If one argument fails, he can fall back on the other. Alternatively Dembski might argue that the cosmic designer made the Universe almost right for the natural evolution of life, but left himself with a little work to do later.

If Dembski believes that the initial conditions for evolution were designed, the obvious thing to do would be to try applying his chance-elimination method to the origin of those conditions. I note that he doesn't attempt to do so.

5.3 The No Free Lunch Theorems

Dembski attempts to use the No Free Lunch theorems (hereafter NFL) of David Wolpert and William Macready28 to support his claim that fitness functions need to be fine-tuned. He presumably considers NFL important to his case, since he names his book after it. However, I will show that NFL is not applicable to biological evolution, and even for those evolutionary algorithms to which it does apply, it does not support the fine-tuning claim.
I'll start by giving a brief explanation of what NFL says, making a number of simplifications and omitting details which need not concern us here. NFL applies only to algorithms meeting the following conditions:

The same algorithm can be used with any problem, i.e. on any fitness landscape, though it won't be efficient on all of them. In terms of a computer program, we can imagine inserting various alternative fitness function modules into the program. We can also imagine the set of all possible fitness functions. This is the vast set consisting of every possible shape of landscape over our given phase space. If there are $S$ points in the phase space and $F$ possible values of the fitness function, then the total number of possible fitness functions is $F^S$, since each point can have any of $F$ values, and we must allow for every possible permutation over the $S$ points.

We are now in a position to understand what NFL says. Suppose we take an algorithm $a_1$, measure its performance on every fitness function in that vast set of possible fitness functions, and take the average over all those performance values. Then we repeat this for any other algorithm $a_2$. NFL tells us that the average performance will be the same for both algorithms, regardless of which pair of algorithms we selected. Since it is true for all pairs of algorithms, and since random search is one of these algorithms, this means that no algorithm is any better (or worse) than a random search, when averaged over all possible fitness functions. It even means that, averaged over all possible fitness functions, a hill-descending algorithm will be just as good as a hill-climbing algorithm at finding high points! (A hill-descender is like a hill-climber except that it moves to the lowest of the available points instead of the highest.)

This result seems incredible, but it really is true. The important thing to remember is the vital phrase "averaged over the set of all possible fitness functions". The vast majority of fitness functions in that set are totally chaotic, with the height of any two adjacent points being unrelated.
Only a minuscule number of those fitness functions have the smooth rolling hills and valleys that we usually associate with a "landscape". In a chaotic landscape, there are no hills worthy of the name to be climbed. Furthermore, remember that every point which a hill-climber or descender peeks at counts as having been "found", even if the algorithm decides not to move there. So if a hill-descender happens to move adjacent to a very tall spike, the fitness value at that spike will be recorded and will count in the descender's final performance evaluation. A landscape picked at random from the set of all possible fitness functions will almost certainly be just a random mass of spikes (figure 2).
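
The averaging claim can be checked by brute force on a toy problem. The following is a minimal sketch, not Wolpert and Macready's formalism: it assumes a tiny phase space of S = 5 points arranged in a ring, F = 3 fitness values and M = 3 evaluations per run, and it uses simplified, deterministic, non-revisiting versions of a hill-climber and a hill-descender. All names and sizes are illustrative choices, not taken from the book or the theorems. Performance is the best fitness value among the points evaluated, so every point "peeked at" counts.

```python
from fractions import Fraction
from itertools import product

S = 5  # points in the phase space (ring layout is an assumption of this sketch)
F = 3  # possible fitness values: 0, 1, 2
M = 3  # fitness evaluations allowed per run


def fixed_sweep(visited):
    """Ignore all fitness values and visit points 0, 1, 2, ... in order."""
    return len(visited)


def make_greedy(prefer_high):
    """Build a simplified hill-climber (True) or hill-descender (False)."""
    def choose(visited):
        if not visited:
            return 0  # every run starts at point 0
        seen = {x for x, _ in visited}
        # Rank visited points by fitness; sorted() is stable, so ties are
        # broken by visiting order and the algorithm stays deterministic.
        ranked = sorted(visited, key=lambda p: -p[1] if prefer_high else p[1])
        for x, _ in ranked:
            for neighbour in ((x + 1) % S, (x - 1) % S):
                if neighbour not in seen:
                    return neighbour  # evaluate an unvisited neighbour next
        raise RuntimeError("no unvisited point left")
    return choose


def best_found(choose, f, m=M):
    """Run a black-box algorithm for m evaluations; return the best value seen."""
    visited = []  # list of (point, fitness) pairs, the only information available
    for _ in range(m):
        x = choose(visited)
        visited.append((x, f[x]))
    return max(value for _, value in visited)


def average_best(choose):
    """Average the performance over every one of the F**S fitness functions."""
    functions = list(product(range(F), repeat=S))
    assert len(functions) == F ** S
    total = sum(best_found(choose, f) for f in functions)
    return Fraction(total, len(functions))


algorithms = {
    "fixed_sweep": fixed_sweep,
    "hill_climber": make_greedy(prefer_high=True),
    "hill_descender": make_greedy(prefer_high=False),
}
averages = {name: average_best(alg) for name, alg in algorithms.items()}
for name, avg in averages.items():
    print(f"{name:15s} {avg}")
```

On any single smooth landscape the three algorithms can differ sharply; it is only the average over all 243 functions, almost all of them jagged, that comes out identical.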

Figure 2. A Random Fitness "Landscape"

We can already see that the relevance of NFL to real problems is limited. The fitness landscapes of real problems are not this chaotic. This fact has been noted by a number of researchers:

5.4 The Irrelevance of NFL to Dembski's Arguments

NFL is not applicable to biological evolution, because biological evolution cannot be represented by any algorithm which satisfies the conditions given above. Unlike simpler evolutionary algorithms, where reproductive success is determined by a comparison of the innate fitness of different individuals, reproductive success in nature is determined by all the contingent events occurring in the lives of the individuals. The fitness function cannot take these events into account, because they depend on interactions with the rest of the population and therefore on the characteristics of other organisms which are also changing under the influence of the algorithm. In other words, the fitness function of biological organisms changes over time in response to changes in the population (of the same species and of other species), violating the final condition listed above. The same also applies to any non-biological simulations in which the individuals interact with each other, such as the competing checkers-playing neural nets which are discussed below.

It would do no good to suggest that the interactions between individuals could be modelled within the optimization algorithm, rather than in the fitness function. This is prevented by the black-box constraint, which stops the optimization algorithm having direct access to information about the environment.

This is similar to the problem of coevolving fitness landscapes raised by Stuart Kauffman (pp. 224-227). Dembski's response to Kauffman, however, does not address my argument. Nothing that Dembski writes (p. 226) changes the fact that, in biological evolution, the fitness function at a given time cannot be determined independently of the state of the population, and therefore NFL does not apply.31

Moreover, NFL is hardly relevant to Dembski's argument even for the simpler, non-interactive evolutionary algorithms to which it does apply (those where the reproductive success of individuals is determined by a comparison of their innate fitness). NFL tells us that, out of the set of all mathematically possible fitness functions, there is only a tiny proportion on which evolutionary algorithms perform as well as they are observed to do in practice. From this, Dembski argues that it would be incredibly fortuitous for a suitable fitness function to occur without fine-tuning by a designer. But the alternative to design is not purely random selection from the set of all mathematically possible fitness functions. Fitness functions are determined by rules, not generated randomly. In the real world, these rules are the physical laws of the Universe. In a computer model, they can be whatever rules the programmer chooses, but, if the model is a simulation of reality, they will be based to some degree on real physical laws.

Rules inevitably give rise to patterns, so that patterned fitness functions will be favoured over totally chaotic ones. If the rules are reasonably regular, we would expect the fitness landscape to be reasonably smooth. In fact, physical laws generally are regular, in the sense that they correspond to continuous mathematical functions, like "$F = ma$", "$E = mc^2$", etc. With these functions, a small change of input leads to a small change of output. So, when fitness is determined by a combination of such laws, it's reasonable to expect that a small movement in the phase space will generally lead to a reasonably small change in the fitness value, i.e. that the fitness landscape will be smooth.
On the other hand, we expect there to be exceptions, because chaos theory and catastrophe theory tell us that even smooth laws can give rise to discontinuities. But real phase spaces have many dimensions. If movement in some dimensions is blocked by discontinuities, there may still be smooth contours in other dimensions. While many potential mutations are catastrophic, many others are not.

Dembski might then argue that this only displaces the problem, and that we are incredibly lucky that the Universe has regular laws. Certainly, there would be no life if the Universe did not have reasonably regular laws. But this is obvious, and is not specifically a consequence of NFL. This argument reduces to just a variant of the cosmological fine-tuning argument, and a particularly weak one at that, since the "choice" to have regular laws rather than chaotic ones is hardly a very "fine" one.

Although it undermines Dembski's argument from NFL, the regularity of laws is not sufficient to ensure that real-world evolution will produce functional complexity. Dembski gives one laboratory example where replicating molecules became simpler (the Spiegelman experiment, p. 209). But it does not follow that this is always so. Dembski has not established any general rule. I would suggest that, because the phase space of biological evolution is so massively multidimensional, we should not be surprised that it has produced enormous functional complexity.

6. The Uniform-Probability Method

Operator... Give me Information.

Dembski tells us that he has two different arguments for design in nature. As well as attempting to show that there exist in nature phenomena which Darwinian evolution does not have the ability to generate (such as the bacterial flagellum), Dembski also deploys another argument. Even if Darwinian evolution did have that ability, he argues, it could only have it by virtue of there having been design involved in the selection of the initial conditions underlying evolution.

This is an argument for what I will call derived design. (Dembski uses the term derived intentionality.) It does not argue for design in a particular event (such as evolution of some structure), but merely argues that design must have been involved at some point or other in the causal chain of events leading to some phenomenon that we observe. We have already seen this argument cast in terms of fine-tuning of fitness functions. Dembski also casts it in terms of specified complexity.

Earlier, specified complexity was introduced as something to be inferred when we had eliminated all the natural hypotheses we could think of to explain an event. But now Dembski is telling us that, even if we cannot eliminate Darwinian evolution as an explanation, we should still make an inference of derived design if we observe specified complexity. Clearly, then, this is a different meaning of specified complexity. This new meaning is an observed property of a phenomenon, not an inferred property of an event. It indicates that the phenomenon has a complex (in a special sense) configuration. This property also goes by the name of complex specified information (CSI).

Note that this is another purely eliminative method; it infers design from the claimed absence of any natural process capable of generating CSI. If the claim were true, it could be considered a proscriptive generalization, but we will see that the claim has no basis whatsoever.

6.2 Complex Specified Information (CSI)

Dembski devises his own measure of how complex a phenomenon's configuration is, and calls it specified information (which I'll abbreviate to SI). He calculates this measure by choosing a specification (as described in 3.1 above) and then calculating the probability of an outcome matching that specification as if the phenomenon was generated by a process having a uniform probability distribution. A uniform probability distribution is one in which all possible outcomes (i.e. configurations) have the same probability, and Dembski calculates the SI on this basis even if the phenomenon in question is known not to have been generated by such a process. (This will be considered more carefully in the next section.) The probability calculated in this way is then converted into "information" by applying the function $I(R) = -\log_2 P(R)$, i.e. the information is the negation of the logarithm (base 2) of the probability, and he refers to the resulting measure as a number of bits.

If the SI of a phenomenon exceeds a universal complexity bound of 500 bits, then Dembski says that the phenomenon exhibits complex specified information (or CSI).32 The universal complexity bound is obtained directly from Dembski's universal probability bound of $10^{-150}$, since $-\log_2(10^{-150})$ is approximately 500. Dembski also refers to CSI as specified complexity, using the two terms interchangeably.

As noted above, this meaning of specified complexity is different from the one we encountered earlier. I'll call it uniform-probability specified complexity. To be clear: eliminative specified complexity is an inferred attribute of an event, indicating that we believe the event was highly improbable with respect to all known causal mechanisms; uniform-probability specified complexity (or CSI) is an observed attribute of a phenomenon, indicating that the phenomenon has a "complex" configuration, without regard to how it came into existence. We will see that Dembski's notion of "complexity" is very different from our normal understanding of the word.

6.3 Evidence For The Uniform-Probability Interpretation

The fact that the probability used to calculate SI is always based on a uniform probability distribution is extremely important. Dembski uses a uniform (or "purely random") distribution even if the phenomenon is known to have been caused by a process having some other probability distribution.
Since this is not explicitly stated by Dembski, and may seem surprising, I will present several items of evidence to justify my interpretation.

Although the evidence is inconclusive, it seems to predominantly favour the uniform-probability interpretation, and that is the one that I will consider hereafter. But let me briefly look at the alternatives:

If Dembski insists that he has only one method of design inference and that it's the chance-elimination method, then he needs to explain away the exhibits above and justify his introduction of the terms "complexity" and "information". The chance-elimination method uses a statistical (probabilistic) technique for eliminating hypotheses. This has nothing to do with complexity or information. Transforming probabilities by applying the trivial function $I = -\log_2 P$ does not magically convert them into complexity or information measures. It only serves to obfuscate the nature of the argument.

My guess is that Dembski has failed to notice that he has two different methods. One reason for his confusion may be that all the chance hypotheses he ever considers in his examples are ones which give rise to a uniform probability distribution, with the sole exception of one trivial case (p. 70). Dembski also seems to consider uniform probability distributions "privileged" in some sense (p. 50). Referring to the phase space of an optimization algorithm, he writes:

But a phase space (as the search space of an optimization algorithm) does not come with a probability distribution attached. It is simply a space of possible solutions which we are interested in searching.[34]

Although basing SI on a uniform probability distribution helps to make it independent of the causal process which produced the phenomenon, it cannot make it completely independent. Given a phase space (or reference class of possibilities, as Dembski calls it), there is only one possible uniform distribution, the one in which all outcomes have equal probability. But how do we choose a phase space? In the case of the SETI sequence it may seem obvious that the relevant phase space is the space of all possible bit sequences of length 1126. But why should we assume that the sequence was drawn from a space of 1126-bit sequences, and not sequences of variable length? Why should we assume that beat and pause were the only two possible values? The supposed extraterrestrials could have chosen to transmit beats of varying amplitudes.

Similar problems arise elsewhere. On p. 166, Dembski calculates the complexity of the word METHINKS as $-\log_2(1/27^8) \approx 38$ bits.[35] This is based on a phase space of strings of 8 characters where each character has 27 possibilities (26 letters in the alphabet plus a space). He does not consider the possibility of more or fewer than 8 characters. So clearly Dembski has a rule that we should only consider possible permutations of the same number of characters (or components) as we actually observed. No justification is given for such a rule, and it still leaves us with an arbitrary choice to make regarding the unit of permutation. In the case of METHINKS, it might be argued that the only sensible unit of permutation is the character. But in other cases we have to make a choice. In a sentence, should we consider permutations of characters or of words? In a genome, should we consider permutations of genes, codons, base pairs or atoms?
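To make the dependence on the phase space concrete, here is a minimal sketch of the SI calculation for a single specified string under a uniform distribution. The function name and the two larger alphabets are hypothetical illustrations, not taken from Dembski's book; only the 27-symbol, 8-character case corresponds to the METHINKS calculation on p. 166.

```python
import math

def specified_information(alphabet_size, length):
    """SI in bits of one exact string, assuming a uniform distribution over
    all strings of `length` symbols drawn from `alphabet_size` symbols.

    P = (1/alphabet_size) ** length, so -log2(P) = length * log2(alphabet_size).
    """
    return length * math.log2(alphabet_size)

# -log2(10^-150): the exact value behind the 500-bit universal complexity bound.
bound_bits = 150 * math.log2(10)

# Candidate phase spaces for the same observed word. Only the first is the
# one used on p. 166; the other two are hypothetical alternatives.
phase_spaces = {
    "27 symbols (capitals plus space)": 27,
    "53 symbols (adding lower case; hypothetical)": 53,
    "95 symbols (printable ASCII; hypothetical)": 95,
}

for label, size in phase_spaces.items():
    bits = specified_information(size, len("METHINKS"))
    print(f"{label}: {bits:.1f} bits")

print(f"universal complexity bound: {bound_bits:.1f} bits (rounded up to 500)")
```

The same eight observed characters yield a different SI for each assumed alphabet, which is the sense in which the measure depends on a choice that the observation alone does not settle.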
Even more problematical is the range of possible characters. Here Dembski has chosen the 26 capital letters and a space as the only possibilities. But why not include lower case letters, numerals, punctuation marks, mathematical symbols, Greek letters, etc.? Just because we didn't observe any of those, that doesn't mean they weren't real possibilities. And if we are to consider only the values that we actually observed, why did Dembski include all 26 letters of the alphabet? Many of those letters were not observed in the word METHINKS or even in the longer sentence in which it was embedded. Perhaps Dembski is relying on knowledge of the causal process which gave rise to the word, knowing that the letters were drawn from a collection of 27 Scrabble tiles, say. But to rely on knowledge of the causal process makes the criterion useless for those cases where we don't know the cause. And the whole purpose of the criterion was to enable us to infer the type of causal process (natural or design) when it is unknown.

The selection of an appropriate phase space is not such a problem in standard Shannon information theory,[36] because we are concerned there with measuring the information transmitted (or produced) by a process. Dembski, on the other hand, wants to measure the information exhibited by a given phenomenon, and from that make an inference about the causal process which produced the phenomenon. This requires him to choose a phase space without knowing the causal process, the result being that his phase spaces are arbitrary.

Dembski devotes a section of his book (pp. 133-137) to the problem of selecting a phase space, but fails to resolve the problem. He argues that we should "err on the side of abundance and include as many possibilities as might plausibly obtain within that context" (p. 136). But this means that we err on the side of overestimating the amount of information exhibited by a phenomenon, and so err on the side of falsely inferring design.
This is hardly reasonable for a method which is supposed to infer design reliably. In any case, Dembski doesn't follow his own advice. We just saw in the METHINKS example that he chose a space based on only 27 characters--hardly the maximum plausible number given no knowledge of the causal process.

Given that SI is based on a uniform probability distribution, there is little doubt that CSI exists in nature. Indeed, all sorts of natural phenomena can be found to exhibit CSI if a suitable phase space is chosen. Take the example mentioned earlier of craters on the Moon. If we take the space of all theoretically possible lunar landscapes and randomly pick a landscape from a uniform distribution over that space, then the probability of obtaining a landscape exhibiting such circular formations as we actually observe on the Moon is extremely small, easily small enough to conclude that the actual lunar landscape exhibits CSI.

Even the orbits of the planets exhibit CSI. If a planetary orbit were randomly picked from a uniform probability distribution over the space of all possible ways of tracing a path around the Sun, then the probability of obtaining such a smooth elliptical path as a planetary orbit is minuscule.

6.5 The Law of Conservation of Information

The Law of Conservation of Information (hereafter LCI) is Dembski's formalized statement of his claim that natural causes cannot generate CSI; they can only shuffle it from one place to another. The LCI states that, if a set of conditions Y is sufficient to cause X, then the SI exhibited by Y and X combined cannot exceed the SI of Y alone by more than the universal complexity bound, i.e.

$I(Y \& X) \le I(Y) + 500$

where I(Y) is the amount of SI exhibited by Y. (I have made Y antecedent to X, rather than the other way around, for the sake of consistency with Dembski's explanation on pages 162-163.)

Despite its name, the LCI is not a conservation law.
Since Dembski acknowledges that small amounts of SI (less than 500 bits) can be generated by chance processes (pp. 155-156), the LCI cannot be construed as a conservation law in any reasonable sense of the term. It is, rather, a limit on how much SI can be generated.

My discussion of the LCI below will be based on my uniform-probability interpretation of SI. However, in case Dembski rejects this interpretation, let me first consider what the LCI would mean if SI is based on the true probabilities of events. It would then be just a disguised version of Dembski's old Law of Small Probabilities, from The Design Inference, which states that specified events of small probability (less than $10^{-150}$) do not occur. He has merely converted probability to "information" by applying the function $I = -\log_2 P$ to each side of an inequality, with the probabilities conditioned on the occurrence of Y. To see this, let X be a specified event which has occurred as a result of Y. Then, by the Law of Small Probabilities,
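In outline, writing I(X|Y) as shorthand for $-\log_2 P(X \mid Y)$ (a notational assumption of this sketch, which may differ from Dembski's own notation), the steps run:

```latex
\begin{align*}
P(X \mid Y) &\ge 10^{-150} \\
-\log_2 P(X \mid Y) &\le -\log_2\!\left(10^{-150}\right) \approx 500 \\
I(X \mid Y) &\le 500 \\
I(Y \& X) = I(Y) + I(X \mid Y) &\le I(Y) + 500
\end{align*}
```

The last line relies on $P(Y \& X) = P(Y)\,P(X \mid Y)$, so that taking $-\log_2$ of both sides turns the product of probabilities into a sum of information measures.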

In this case, the LCI is just a probability limit and has nothing to do with information or complexity in any real sense. I will therefore not consider this interpretation any further.

6.6 Counterexample: Checkers-Playing Neural Nets

My first counterexample to the LCI is one which Dembski bravely introduces, namely the evolving checkers-playing neural nets of Chellapilla and Fogel (pp. 221-223).[37] I'll start with a brief description of the evolutionary algorithm.

Neural nets were defined by a set of parameters (the details are unimportant) which determined their strategy for playing checkers. At the start of the program run, a population of 15 neural nets was created with random parameters. They had no special structures corresponding to any principles of checkers strategy. They were given just the location, number and types of pieces--the same basic information that a novice player would have on his/her first game. In each generation, the current population of 15 neural nets spawned 15 offspring, with random variations on their parameters. The resulting 30 neural nets then played a tournament, with each neural net playing 5 games as red (moving first) against randomly selected opponents. The neural nets were awarded +1 point for a win, 0 for a draw and -2 for a loss. Then the 15 neural nets with the highest total scores went through to the next generation. I'll refer to the (+1, 0, -2) triplet as the scoring regime, and to the survival of the 15 neural nets with the highest total score as the survival criterion.

The neural nets produced by this algorithm were very good checkers players, and Dembski assumes that they exhibited specified complexity (CSI). He gives no justification for this assumption, but it seems reasonable given the uniform-probability interpretation. Presumably the specification here is, broadly speaking, the production of a good checkers player, and the phase space is the space of all possible values of a neural net's parameters.
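The generational loop described above can be sketched roughly as follows. This is an illustrative outline only, not Chellapilla and Fogel's program: each "neural net" is reduced to a bare parameter vector, `play_game` is a hypothetical placeholder for a real game of checkers, and the sketch assumes that only the red player's score is updated after each game.

```python
import random

POP_SIZE = 15        # nets surviving each generation (from the description)
GAMES_AS_RED = 5     # games each net plays as red (from the description)
SCORING = {"win": 1, "draw": 0, "loss": -2}   # the scoring regime

def random_net(rng, n_params=8):
    """A stand-in 'neural net': just a vector of random parameters."""
    return [rng.gauss(0.0, 1.0) for _ in range(n_params)]

def mutate(net, rng, sigma=0.1):
    """Offspring: a copy of the parent with random variations on its parameters."""
    return [w + rng.gauss(0.0, sigma) for w in net]

def play_game(red, opponent, rng):
    """HYPOTHETICAL placeholder for a checkers game; returns the result for red.

    A real implementation would have the two nets evaluate board positions.
    Here the outcome is a noisy comparison of parameter sums, purely so that
    the loop runs end to end.
    """
    roll = rng.gauss(sum(red) - sum(opponent), 1.0)
    if roll > 0.5:
        return "win"
    if roll < -0.5:
        return "loss"
    return "draw"

def next_generation(population, rng):
    """One generation: spawn offspring, run the tournament, keep the top 15."""
    contestants = population + [mutate(p, rng) for p in population]  # 30 nets
    scores = [0] * len(contestants)
    for i, net in enumerate(contestants):
        others = [k for k in range(len(contestants)) if k != i]
        for _ in range(GAMES_AS_RED):
            j = rng.choice(others)  # randomly selected opponent
            # Assumption: only the red player's total is updated.
            scores[i] += SCORING[play_game(net, contestants[j], rng)]
    ranked = sorted(range(len(contestants)), key=lambda i: scores[i], reverse=True)
    return [contestants[i] for i in ranked[:POP_SIZE]]   # the survival criterion

rng = random.Random(0)
population = [random_net(rng) for _ in range(POP_SIZE)]
for _ in range(10):
    population = next_generation(population, rng)
```

Note that the scoring regime and the survival criterion enter only as a constant dictionary and a constant cut-off; nothing about checkers strategy is encoded in either.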
If the parameters were drawn randomly, the probability of obtaining a good checkers player would be extremely low.

Since the output of the program exhibited CSI, Dembski needs to show that there was CSI in the input. To his credit, Dembski doesn't take the easy way out and claim that the CSI was in the computer or in the program as a whole. The programming of the neural nets was quite independent of the evolutionary algorithm. Instead Dembski claims that the CSI was "inserted" by Chellapilla and Fogel as a consequence of their decision to keep the "criterion of winning" constant from one generation to the next! But a constant criterion is the simplest option, not a complex one, and the idea that such a straightforward decision could have inserted a lot of information is absurd.

As we saw earlier, the fitness function reflects the problem to be solved. In this case, the problem is to produce neural nets which will play good checkers under the prevailing conditions. Since the conditions under which the evolved neural nets would be playing were (presumably) unknown at the time the algorithm was programmed, it might be argued that the choice of winning criterion was a free one. The programmers could therefore have chosen any criterion they liked. Nevertheless, the natural choice in such a situation is to choose the simplest option. In choosing a constant winning criterion, that is what the programmers did. Since they had no reason to think the neural nets would find themselves in a tournament with variable winning conditions, there was no reason to evolve them under such conditions.

Contrary to Dembski's assertion that the choice of a constant criterion "is without a natural analogue", the natural analogue of the constant winning criterion is the constancy of the laws of physics and logic.

It's not clear what Dembski means by "the criterion for tournament victory". However, the fact that a biological system's success depends on who is "playing in the tournament" certainly does have an analogue in Chellapilla and Fogel's algorithm. The success of a neural net was dependent on which other neural nets were playing in the tournament.

Before considering some further objections to Dembski's claim, I need to decide what he means by "criterion of winning". Does he mean just the scoring regime? Or does he mean the entire set of tournament rules: the selection of opponents, the scoring regime and the survival criterion? For brevity, I will consider only the scoring regime, but similar arguments can be made in respect of the other elements of the tournament rules.

Dembski insists that the SI "inserted" by Chellapilla and Fogel's choice is determined with respect to "the space of all possible combinations of local fitness functions from which they chose their coordinated set of local fitness functions". It's not clear what Dembski means by fitness functions here. As we've seen, in a situation where the success of an individual depends on its interactions with other individuals in the population (in this case the population of neural nets), the fitness function varies as the population varies, since the fitness of an individual is relative to its environment, which includes the rest of the population. Dembski seems to recognize this, since he writes: